Preprocessing, Group Evaluation, Hyperparameter Optimization and Gradient Descent#

Preprocessing#

Example ordinal vs categorical#

Given the house prices dataset from the first regression example in an earlier exercise:

Give some example features for the four different data types from the lecture.

Name at least two issues in this dataset and two others that could occur when training a model.

Assume that you are given the postal code of a house - what other features could you add to the data based on that? Why do you think I ask this question?
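As a rough illustration of the postal-code question, external per-area information could be joined onto the house data. The sketch below uses pandas `merge`. The column `PostalCode`, the lookup table `area_info`, and every value in both frames are made up for illustration; none of them are part of the dataset.

```python
import pandas as pd

# Hypothetical house records with a postal code column (not in the real data)
houses = pd.DataFrame({
    "Id": [1, 2, 3],
    "PostalCode": ["50010", "50011", "50010"],
    "SalePrice": [208500, 181500, 223500],
})

# Hypothetical external table with one row per postal code
area_info = pd.DataFrame({
    "PostalCode": ["50010", "50011"],
    "median_income": [52000, 61000],
    "km_to_center": [3.2, 7.5],
})

# A left join keeps every house and adds the area-level columns
enriched = houses.merge(area_info, on="PostalCode", how="left")
print(enriched)
```

A left join is used so that houses with an unknown postal code would be kept, with missing values in the new columns.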

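import pandas as pd

# load the house prices data, using the "Id" column as index
data_houses = pd.read_csv("exercise_02_train.csv", index_col="Id")

# print the number of unique and missing values for every column
for c in data_houses.columns:
    f = data_houses[c]
    print("--------")
    print(c)
    n_unique = f.nunique()
    print(" * unique:", n_unique)
    print(" * NAs: ", f.isna().sum())
    # only list the values of columns with few distinct values
    if n_unique < 10:
        print(" * values:", f.unique())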

--------

MSSubClass

* unique: 15

* NAs: 0

--------

MSZoning

* unique: 5

* NAs: 0

* values: ['RL' 'RM' 'C (all)' 'FV' 'RH']

--------

LotFrontage

* unique: 110

* NAs: 259

--------

LotArea

* unique: 1073

* NAs: 0

--------

Street

* unique: 2

* NAs: 0

* values: ['Pave' 'Grvl']

--------

Alley

* unique: 2

* NAs: 1369

* values: [nan 'Grvl' 'Pave']

--------

LotShape

* unique: 4

* NAs: 0

* values: ['Reg' 'IR1' 'IR2' 'IR3']

--------

LandContour

* unique: 4

* NAs: 0

* values: ['Lvl' 'Bnk' 'Low' 'HLS']

--------

Utilities

* unique: 2

* NAs: 0

* values: ['AllPub' 'NoSeWa']

--------

LotConfig

* unique: 5

* NAs: 0

* values: ['Inside' 'FR2' 'Corner' 'CulDSac' 'FR3']

--------

LandSlope

* unique: 3

* NAs: 0

* values: ['Gtl' 'Mod' 'Sev']

--------

Neighborhood

* unique: 25

* NAs: 0

--------

Condition1

* unique: 9

* NAs: 0

* values: ['Norm' 'Feedr' 'PosN' 'Artery' 'RRAe' 'RRNn' 'RRAn' 'PosA' 'RRNe']

--------

Condition2

* unique: 8

* NAs: 0

* values: ['Norm' 'Artery' 'RRNn' 'Feedr' 'PosN' 'PosA' 'RRAn' 'RRAe']

--------

BldgType

* unique: 5

* NAs: 0

* values: ['1Fam' '2fmCon' 'Duplex' 'TwnhsE' 'Twnhs']

--------

HouseStyle

* unique: 8

* NAs: 0

* values: ['2Story' '1Story' '1.5Fin' '1.5Unf' 'SFoyer' 'SLvl' '2.5Unf' '2.5Fin']

--------

OverallQual

* unique: 10

* NAs: 0

--------

OverallCond

* unique: 9

* NAs: 0

* values: [5 8 6 7 4 2 3 9 1]

--------

YearBuilt

* unique: 112

* NAs: 0

--------

YearRemodAdd

* unique: 61

* NAs: 0

--------

RoofStyle

* unique: 6

* NAs: 0

* values: ['Gable' 'Hip' 'Gambrel' 'Mansard' 'Flat' 'Shed']

--------

RoofMatl

* unique: 8

* NAs: 0

* values: ['CompShg' 'WdShngl' 'Metal' 'WdShake' 'Membran' 'Tar&Grv' 'Roll'

'ClyTile']

--------

Exterior1st

* unique: 15

* NAs: 0

--------

Exterior2nd

* unique: 16

* NAs: 0

--------

MasVnrType

* unique: 3

* NAs: 872

* values: ['BrkFace' nan 'Stone' 'BrkCmn']

--------

MasVnrArea

* unique: 327

* NAs: 8

--------

ExterQual

* unique: 4

* NAs: 0

* values: ['Gd' 'TA' 'Ex' 'Fa']

--------

ExterCond

* unique: 5

* NAs: 0

* values: ['TA' 'Gd' 'Fa' 'Po' 'Ex']

--------

Foundation

* unique: 6

* NAs: 0

* values: ['PConc' 'CBlock' 'BrkTil' 'Wood' 'Slab' 'Stone']

--------

BsmtQual

* unique: 4

* NAs: 37

* values: ['Gd' 'TA' 'Ex' nan 'Fa']

--------

BsmtCond

* unique: 4

* NAs: 37

* values: ['TA' 'Gd' nan 'Fa' 'Po']

--------

BsmtExposure

* unique: 4

* NAs: 38

* values: ['No' 'Gd' 'Mn' 'Av' nan]

--------

BsmtFinType1

* unique: 6

* NAs: 37

* values: ['GLQ' 'ALQ' 'Unf' 'Rec' 'BLQ' nan 'LwQ']

--------

BsmtFinSF1

* unique: 637

* NAs: 0

--------

BsmtFinType2

* unique: 6

* NAs: 38

* values: ['Unf' 'BLQ' nan 'ALQ' 'Rec' 'LwQ' 'GLQ']

--------

BsmtFinSF2

* unique: 144

* NAs: 0

--------

BsmtUnfSF

* unique: 780

* NAs: 0

--------

TotalBsmtSF

* unique: 721

* NAs: 0

--------

Heating

* unique: 6

* NAs: 0

* values: ['GasA' 'GasW' 'Grav' 'Wall' 'OthW' 'Floor']

--------

HeatingQC

* unique: 5

* NAs: 0

* values: ['Ex' 'Gd' 'TA' 'Fa' 'Po']

--------

CentralAir

* unique: 2

* NAs: 0

* values: ['Y' 'N']

--------

Electrical

* unique: 5

* NAs: 1

* values: ['SBrkr' 'FuseF' 'FuseA' 'FuseP' 'Mix' nan]

--------

1stFlrSF

* unique: 753

* NAs: 0

--------

2ndFlrSF

* unique: 417

* NAs: 0

--------

LowQualFinSF

* unique: 24

* NAs: 0

--------

GrLivArea

* unique: 861

* NAs: 0

--------

BsmtFullBath

* unique: 4

* NAs: 0

* values: [1 0 2 3]

--------

BsmtHalfBath

* unique: 3

* NAs: 0

* values: [0 1 2]

--------

FullBath

* unique: 4

* NAs: 0

* values: [2 1 3 0]

--------

HalfBath

* unique: 3

* NAs: 0

* values: [1 0 2]

--------

BedroomAbvGr

* unique: 8

* NAs: 0

* values: [3 4 1 2 0 5 6 8]

--------

KitchenAbvGr

* unique: 4

* NAs: 0

* values: [1 2 3 0]

--------

KitchenQual

* unique: 4

* NAs: 0

* values: ['Gd' 'TA' 'Ex' 'Fa']

--------

TotRmsAbvGrd

* unique: 12

* NAs: 0

--------

Functional

* unique: 7

* NAs: 0

* values: ['Typ' 'Min1' 'Maj1' 'Min2' 'Mod' 'Maj2' 'Sev']

--------

Fireplaces

* unique: 4

* NAs: 0

* values: [0 1 2 3]

--------

FireplaceQu

* unique: 5

* NAs: 690

* values: [nan 'TA' 'Gd' 'Fa' 'Ex' 'Po']

--------

GarageType

* unique: 6

* NAs: 81

* values: ['Attchd' 'Detchd' 'BuiltIn' 'CarPort' nan 'Basment' '2Types']

--------

GarageYrBlt

* unique: 97

* NAs: 81

--------

GarageFinish

* unique: 3

* NAs: 81

* values: ['RFn' 'Unf' 'Fin' nan]

--------

GarageCars

* unique: 5

* NAs: 0

* values: [2 3 1 0 4]

--------

GarageArea

* unique: 441

* NAs: 0

--------

GarageQual

* unique: 5

* NAs: 81

* values: ['TA' 'Fa' 'Gd' nan 'Ex' 'Po']

--------

GarageCond

* unique: 5

* NAs: 81

* values: ['TA' 'Fa' nan 'Gd' 'Po' 'Ex']

--------

PavedDrive

* unique: 3

* NAs: 0

* values: ['Y' 'N' 'P']

--------

WoodDeckSF

* unique: 274

* NAs: 0

--------

OpenPorchSF

* unique: 202

* NAs: 0

--------

EnclosedPorch

* unique: 120

* NAs: 0

--------

3SsnPorch

* unique: 20

* NAs: 0

--------

ScreenPorch

* unique: 76

* NAs: 0

--------

PoolArea

* unique: 8

* NAs: 0

* values: [ 0 512 648 576 555 480 519 738]

--------

PoolQC

* unique: 3

* NAs: 1453

* values: [nan 'Ex' 'Fa' 'Gd']

--------

Fence

* unique: 4

* NAs: 1179

* values: [nan 'MnPrv' 'GdWo' 'GdPrv' 'MnWw']

--------

MiscFeature

* unique: 4

* NAs: 1406

* values: [nan 'Shed' 'Gar2' 'Othr' 'TenC']

--------

MiscVal

* unique: 21

* NAs: 0

--------

MoSold

* unique: 12

* NAs: 0

--------

YrSold

* unique: 5

* NAs: 0

* values: [2008 2007 2006 2009 2010]

--------

SaleType

* unique: 9

* NAs: 0

* values: ['WD' 'New' 'COD' 'ConLD' 'ConLI' 'CWD' 'ConLw' 'Con' 'Oth']

--------

SaleCondition

* unique: 6

* NAs: 0

* values: ['Normal' 'Abnorml' 'Partial' 'AdjLand' 'Alloca' 'Family']

--------

SalePrice

* unique: 663

* NAs: 0
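The listing above mixes several kinds of columns. The following is a minimal sketch of how an ordered and an unordered text column might be encoded with pandas. The ordering Po < Fa < TA < Gd < Ex is an assumption about the quality codes (the listing does not state it), and the four-row frame `toy` is a hypothetical stand-in for the real data.

```python
import pandas as pd

# Hypothetical mini frame using values that appear in the listing above
toy = pd.DataFrame({
    "ExterQual": ["Gd", "TA", "Ex", "Fa"],
    "MSZoning": ["RL", "RM", "FV", "RL"],
})

# Ordinal feature: map levels to integers that respect the assumed order
quality_order = ["Po", "Fa", "TA", "Gd", "Ex"]
toy["ExterQual_ord"] = pd.Categorical(
    toy["ExterQual"], categories=quality_order, ordered=True
).codes

# Nominal feature: one indicator column per category, no order implied
zoning_onehot = pd.get_dummies(toy["MSZoning"], prefix="MSZoning")

encoded = pd.concat([toy[["ExterQual_ord"]], zoning_onehot], axis=1)
print(encoded)
```

Integer codes would suggest a ranking for MSZoning that does not exist, which is why the sketch treats the two columns differently.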

Evaluation: Groups#

Let’s consider a cancer dataset. The goal is to predict the progression of the cancer (e.g., stage 1, stage 2, …). Each patient appears multiple times in the dataset. Can you randomly split the data into a train and a test set?

Read the documentation of GroupShuffleSplit. What does it do? Explain what the groups parameter of the split method is used for!

Apply GroupShuffleSplit on the data below and examine the results. The documentation of GroupShuffleSplit also provides an example.

Also have a look at the different splitting strategies provided by scikit-learn.

# code to generate data (skip this)

import numpy as np

import matplotlib.pyplot as plt

rng = np.random.RandomState(1338)

cmap_data = plt.cm.Paired

cmap_cv = plt.cm.coolwarm

n_splits = 4

# Generate the class/group data

n_points = 20

X = rng.randn(n_points, 3)

y = rng.choice([0,1], n_points)

# Generate 10 uneven groups

# draw a prior for the likelihood of each group from the Dirichlet Distribution

# given that we observed elements of each group alpha_i - 1 times (here, alpha_i = 2)

group_prior = rng.dirichlet([2] * 10)

# assign a group [0,...,9] to each of the 20 points

groups = np.repeat(np.arange(10), rng.multinomial(n_points, group_prior))

from sklearn.model_selection import GroupShuffleSplit

# Task 3: use X, y, groups and apply GroupShuffleSplit

X, y, groups

X, y, groups
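One way to see the effect of the groups argument is a small self-contained run. The sketch below does not reuse the data generated above: `X_demo`, `y_demo` and `groups_demo` (four hypothetical patients with three rows each) are made-up stand-ins, and `test_size=0.25` is an arbitrary choice.

```python
import numpy as np
from sklearn.model_selection import GroupShuffleSplit

rng = np.random.RandomState(0)
X_demo = rng.randn(12, 3)
y_demo = rng.choice([0, 1], 12)
# Hypothetical patient ids: 4 patients, 3 measurements each
groups_demo = np.repeat(np.arange(4), 3)

# test_size refers to the share of groups, not the share of rows
splitter = GroupShuffleSplit(n_splits=3, test_size=0.25, random_state=0)

for fold, (train_idx, test_idx) in enumerate(
    splitter.split(X_demo, y_demo, groups=groups_demo)
):
    train_groups = sorted(int(g) for g in set(groups_demo[train_idx]))
    test_groups = sorted(int(g) for g in set(groups_demo[test_idx]))
    print(f"fold {fold}: train groups {train_groups}, test groups {test_groups}")
```

In every fold, the printed train and test group lists should not overlap, so no patient contributes rows to both sides of a split.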

Hyperparameter Optimization#

What is the difference between parameters and hyperparameters of a model? Give examples!

What is hyperparameter optimization?

Name two methods for hyperparameter optimization and compare them.
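Grid search and random search are two common candidates for the last question (the exercise itself does not prescribe them). The sketch below runs both on made-up data with scikit-learn's `GridSearchCV` and `RandomizedSearchCV`. The data `X_toy`, `y_toy`, the depth range and `n_iter=4` are assumptions chosen only for illustration.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV, RandomizedSearchCV
from sklearn.tree import DecisionTreeRegressor

# Made-up regression data: a noisy sine curve
rng = np.random.RandomState(0)
X_toy = rng.uniform(0, 10, size=(80, 1))
y_toy = np.sin(X_toy[:, 0]) + rng.normal(scale=0.2, size=80)

depths = list(range(1, 11))

# Grid search: evaluates every candidate value
grid = GridSearchCV(
    DecisionTreeRegressor(random_state=0),
    param_grid={"max_depth": depths},
    cv=5,
    scoring="neg_mean_absolute_error",
)
grid.fit(X_toy, y_toy)

# Random search: evaluates only n_iter randomly drawn candidates
rand = RandomizedSearchCV(
    DecisionTreeRegressor(random_state=0),
    param_distributions={"max_depth": depths},
    n_iter=4,
    cv=5,
    scoring="neg_mean_absolute_error",
    random_state=0,
)
rand.fit(X_toy, y_toy)

print("grid:  ", len(grid.cv_results_["params"]), "candidates,", grid.best_params_)
print("random:", len(rand.cv_results_["params"]), "candidates,", rand.best_params_)
```

With a single hyperparameter the grid is cheap. The cost difference grows quickly once several hyperparameters are combined, because the grid size is the product of the individual value counts.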

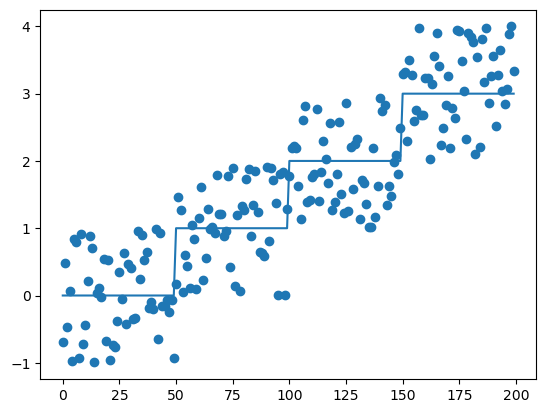

For the following dataset, we report the mean absolute error of a DecisionTreeRegressor using 10-fold cross-validation via the function cross_val_score. The function’s parameters cv and scoring set the number of cross-validation splits and the validation metric (e.g. neg_mean_absolute_error).

Afterwards, we use GridSearchCV to optimize the max_depth hyperparameter of a DecisionTreeRegressor over the values 1, 2, 3 and 4 and report the same score. For this, we run GridSearchCV with 10-fold cross-validation. Note the cv and param_grid parameters of GridSearchCV.

What do you think the terms “nested cross-validation”, “inner cross-validation” and “outer cross-validation” refer to in this example?

import numpy as np

import matplotlib.pyplot as plt

from sklearn.tree import DecisionTreeRegressor

from sklearn.model_selection import cross_val_score, GridSearchCV

from sklearn.metrics import mean_absolute_error

# generate X and y

np.random.seed(12)

n_steps = 4

X = np.arange(50 * n_steps).reshape((-1,1))

y_orig = np.repeat(np.arange(4), int(X.shape[0] / n_steps))

y = y_orig + (np.random.random(y_orig.size) - 0.5) * 2

# plot data

plt.scatter(X, y)

plt.plot(X, y_orig)


# normal model

model = DecisionTreeRegressor(max_depth=None)

results = cross_val_score(model, X, y, cv=10, scoring="neg_mean_absolute_error")

print(f"MAE: {abs(results.mean()):.3f} +/- {results.std():.3f}")

MAE: 0.648 +/- 0.145

# hyper parameter optimization

grid_params = {"max_depth": [1,2,3,4]}

model = GridSearchCV(DecisionTreeRegressor(), cv=10, param_grid=grid_params)

results = cross_val_score(model, X, y, cv=10, scoring="neg_mean_absolute_error")

print(f"MAE: {abs(results.mean()):.3f} +/- {results.std():.3f}")

MAE: 0.556 +/- 0.066
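The second cell above passes a GridSearchCV object to cross_val_score. Written out as an explicit loop, that combination could look roughly like the sketch below. It deviates from the cell above in labeled ways: the outer split is a shuffled 5-fold `KFold`, the inner search uses 5 folds, and the inner search scores by `neg_mean_absolute_error` instead of the estimator default. The numbers it prints are therefore not expected to match the output above.

```python
import numpy as np
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import GridSearchCV, KFold
from sklearn.tree import DecisionTreeRegressor

# Same data generation as in the exercise
np.random.seed(12)
n_steps = 4
X = np.arange(50 * n_steps).reshape((-1, 1))
y_orig = np.repeat(np.arange(4), X.shape[0] // n_steps)
y = y_orig + (np.random.random(y_orig.size) - 0.5) * 2

# Outer loop: estimates how well "tree + tuning procedure" generalizes
outer_cv = KFold(n_splits=5, shuffle=True, random_state=0)  # assumption: shuffled
outer_scores, chosen_depths = [], []

for train_idx, test_idx in outer_cv.split(X):
    # Inner loop: picks max_depth using only the outer training part
    inner_search = GridSearchCV(
        DecisionTreeRegressor(random_state=0),
        param_grid={"max_depth": [1, 2, 3, 4]},
        cv=5,
        scoring="neg_mean_absolute_error",
    )
    inner_search.fit(X[train_idx], y[train_idx])

    # The refitted best model is evaluated on data the search never saw
    y_pred = inner_search.predict(X[test_idx])
    outer_scores.append(mean_absolute_error(y[test_idx], y_pred))
    chosen_depths.append(inner_search.best_params_["max_depth"])

print("chosen max_depth per outer fold:", chosen_depths)
print(f"MAE: {np.mean(outer_scores):.3f} +/- {np.std(outer_scores):.3f}")
```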

Gradient Descent#

Learning Rate#

Explain the tradeoff between small and large learning rates.
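A toy run can make the tradeoff concrete. The sketch below minimizes f(w) = w² (gradient 2w) from w = 1 with three learning rates. The function, the starting point and the three rates are assumptions picked for illustration and are not taken from the lecture.

```python
def run_gradient_descent(learning_rate, w_start=1.0, n_steps=20):
    """Minimize f(w) = w**2 with plain gradient descent and return the final w."""
    w = w_start
    for _ in range(n_steps):
        gradient = 2.0 * w  # derivative of w**2
        w = w - learning_rate * gradient
    return w


for lr in (0.01, 0.5, 1.1):
    w_final = run_gradient_descent(lr)
    print(f"learning rate {lr}: w after 20 steps = {w_final:.4f}")
```

Each update multiplies w by (1 - 2 · learning rate). For 0.01 the factor is 0.98, so progress towards the minimum at 0 is slow. For 0.5 the factor is 0, so the minimum is reached in one step. For 1.1 the factor is -1.2, so w flips sign and grows in magnitude with every step.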

Ice Cream Example#

You are given a dataset with features \(x\) (temperature in degrees Celsius) and target \(y\) (kg of ice cream sold): \(\begin{aligned} x &= (20, 5, 15, 30)^T\\ y &= (40, 10, 30, 60)^T \end{aligned}\)

Define the objective for a linear regression model to predict the sold ice cream from the temperature data.

Compute the gradients of the objective with respect to the parameters of the linear regression model and do one step of gradient descent with a learning rate of \(2.5 \cdot 10^{-4}\). The initial parameters are \(\boldsymbol\beta = (0, 1)^T\).

Assume the final parameters are \(\boldsymbol\beta = (0, 2)^T\). What is the interpretation of these values?

Now another column is added to the data that denotes the weather (rainy/foggy/cloudy/sunny). How could you present this data to the model?

What is one reason you might want to use SGD instead of the closed-form solution to compute the parameters of a linear regression?
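The gradient step can be checked numerically. The sketch below rests on two assumptions that the exercise leaves open: the objective is the mean squared error, \(L(\boldsymbol\beta) = \frac{1}{n}\sum_i (y_i - \beta_0 - \beta_1 x_i)^2\), and \(\boldsymbol\beta = (\beta_0, \beta_1)^T\) is ordered as (intercept, slope). A different objective, for example a sum instead of a mean or an extra factor 1/2, rescales the gradient and therefore the step.

```python
import numpy as np

x = np.array([20.0, 5.0, 15.0, 30.0])   # temperature
y = np.array([40.0, 10.0, 30.0, 60.0])  # kg of ice cream sold
beta = np.array([0.0, 1.0])             # assumed order: (intercept, slope)
learning_rate = 2.5e-4

# Assumed objective: mean squared error
residuals = y - (beta[0] + beta[1] * x)

# Partial derivatives of the MSE with respect to intercept and slope
gradient = np.array([
    -2.0 * residuals.mean(),
    -2.0 * (residuals * x).mean(),
])

beta_new = beta - learning_rate * gradient
print("gradient:", gradient)
print("beta after one step:", beta_new)
```

Under these assumptions the gradient is (-35, -775), so one step moves the parameters to (0.00875, 1.19375).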

Deriving Gradients#

Do two steps of gradient descent to minimize the following function \(f\). Initial input values are \((x^{(0)}, y^{(0)}) = (1, 0)\). The learning rate equals \(1\). \(f(x,y) = x^2 + e^{-xy^2}\)
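Gradient descent moves against the gradient, which lowers \(f\); moving along the gradient (gradient ascent) raises it. The sketch below applies both update rules to this function so the two can be compared. The helper names `grad_f` and `take_steps` and the `direction` argument are illustrative choices, not part of the exercise.

```python
import numpy as np


def grad_f(x, y):
    """Gradient of f(x, y) = x**2 + exp(-x * y**2)."""
    e = np.exp(-x * y**2)
    return np.array([2.0 * x - y**2 * e, -2.0 * x * y * e])


def take_steps(start, learning_rate, n_steps, direction):
    """Return all visited points; direction=-1.0 is descent, +1.0 is ascent."""
    point = np.array(start, dtype=float)
    path = [point.copy()]
    for _ in range(n_steps):
        point = point + direction * learning_rate * grad_f(*point)
        path.append(point.copy())
    return path


descent_path = take_steps((1.0, 0.0), learning_rate=1.0, n_steps=2, direction=-1.0)
ascent_path = take_steps((1.0, 0.0), learning_rate=1.0, n_steps=2, direction=1.0)
print("descent:", [p.tolist() for p in descent_path])
print("ascent: ", [p.tolist() for p in ascent_path])
```

With a learning rate of 1, descent jumps from (1, 0) to (-1, 0) and back to (1, 0), while ascent moves to (3, 0) and then (9, 0). Neither run settles, which ties back to the learning rate question above.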